BACKGROUND

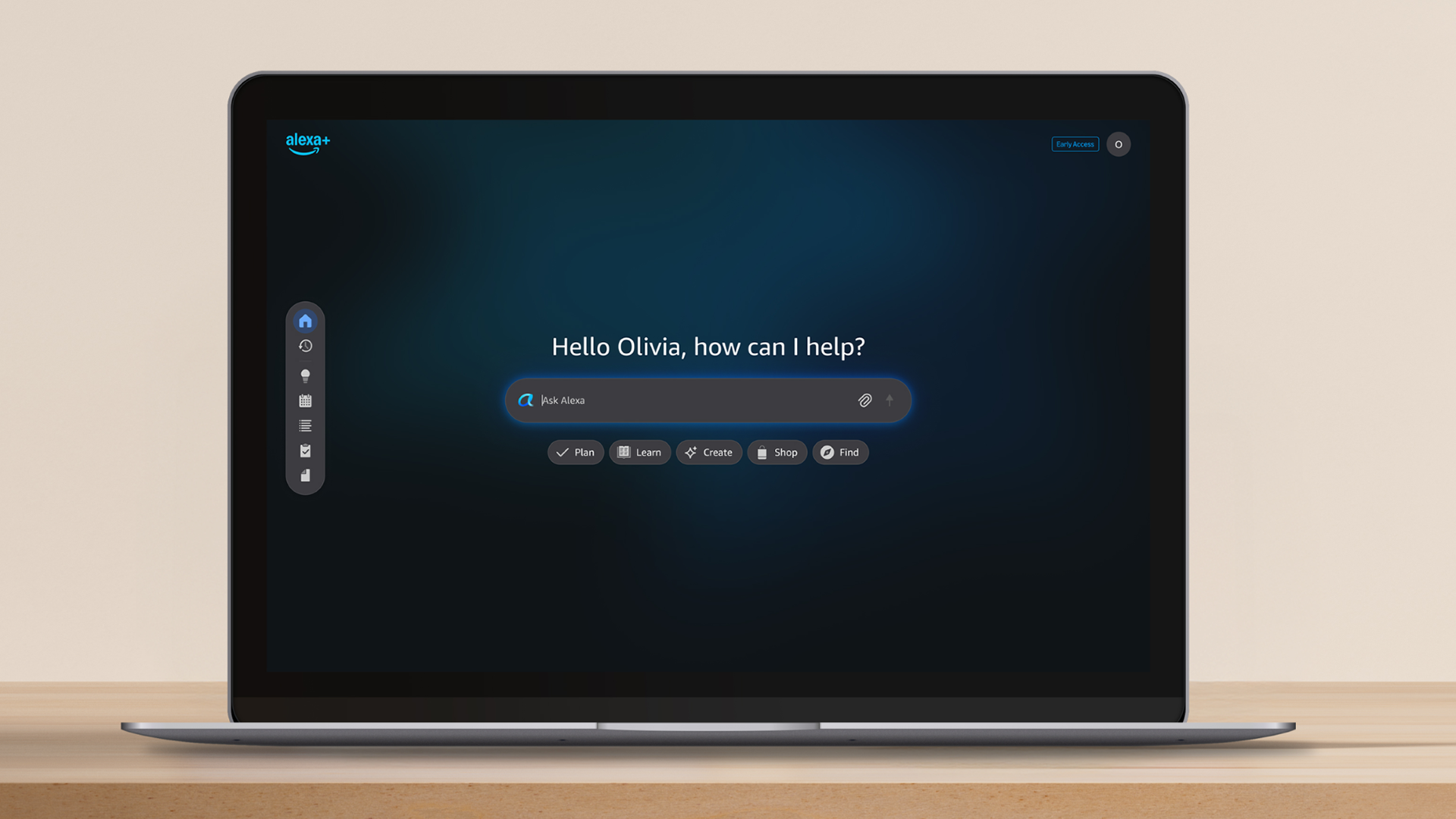

Alexa+ is a reimagined conversational AI experience designed to feel more responsive, intelligent, and human across platforms. As the product evolved, motion became a key tool for communicating system behavior, guiding attention, and visualizing otherwise invisible AI interactions. This work focused on defining motion patterns and visual behaviors that could scale across surfaces while supporting emerging, interaction-driven experiences.

THE CHALLENGE

Early product concepts and system architecture were still forming, making it challenging to visualize how users would understand system state, responsiveness, and intent—especially in conversational and AI-driven interactions. Motion behaviors varied across features, and there was no shared interaction language to support future platforms or more spatial experiences. The challenge was to translate abstract product ideas, user stories, and evolving flows into clear, reusable motion patterns that could ground the experience.

Early motion prototypes showing interaction patterns

MY CONTRIBUTIONS

I led the exploration and definition of motion interaction patterns for product UI across Alexa+ for web and the Alexa app. To establish the motion framework, I worked alongside UX designers, researchers, and product teams while user stories and system architecture were still being developed. I translated ambiguous concepts, wireframes, and narratives into motion prototypes that clarified interaction, hierarchy, and system feedback.

I defined and iterated on a motion design system that established interaction patterns, timing, easing, and spatial behaviors, keeping motion consistent as the broader design system evolved. A core focus was visualizing conversational AI behavior: using motion to express listening, processing, and response states in a way that felt intuitive and human.

In parallel, I supported product storytelling through high-fidelity motion explorations and concept vignettes, helping teams visualize how the experience could scale into more immersive and spatial contexts.

CONCLUSION

The motion system established a foundation for expressing conversational AI behavior. By defining how the system listens, processes, responds, and transitions between states, it made intelligent behavior more legible and trustworthy to users.

Research shows that clear system feedback and responsive animation can improve user comprehension and perceived responsiveness in conversational interfaces by 20–30%, particularly during moments of latency or uncertainty. Guided by this insight, the system used timing, rhythm, and spatial change to visualize abstract AI behaviors—such as intent recognition, confidence, and reasoning—without relying on explicit UI.

This approach created a scalable motion language that supports consistent AI interactions across platforms while remaining adaptable to more immersive and spatial experiences, including future AI interfaces.